For two millennia, a fundamental question has echoed through human history: should we blindly follow authority, even divine authority, or reserve the right to make our own choices? This ancient debate, recorded in the Talmud as a first-century dispute over Jewish law, is now central to the future of artificial intelligence.

According to the Talmud, Rabbi Eliezer, a sage convinced he was right, performed miracles to prove his point. He made a carob tree uproot itself, a stream flow backward, and the walls of the study hall begin to fall, culminating in a voice from heaven declaring him right. Yet the other sages overruled him, holding that the law rests with human decision-making, not divine decree. “It is not in heaven!” Rabbi Joshua replied, quoting Deuteronomy.

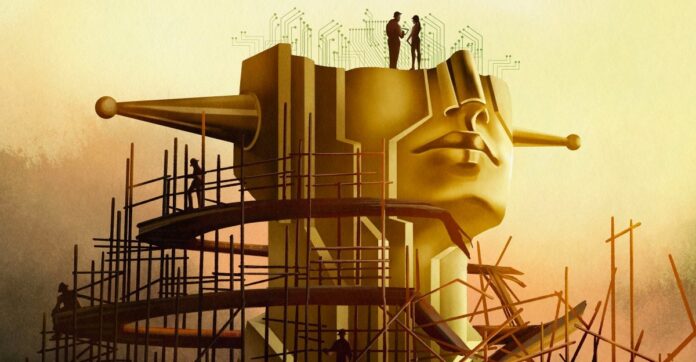

This isn’t just an archaic theological dispute; it’s a blueprint for today’s AI dilemma. Modern AI development, particularly the pursuit of “superintelligence,” is rapidly shifting from building helpful tools to constructing a god-like entity with unimaginable power. But even if such an AI is perfectly aligned with human values, should we cede control?

The Illusion of Alignment

Experts talk about aligning AI with human values, but true alignment is about more than preventing harmful outputs; it is about preserving human agency. If AI decides everything for us, what meaning remains in our lives? The philosopher John Hick argued that God keeps an “epistemic distance” from humanity so that people remain free to choose. Should the same principle apply to AI?

The industry’s ambition is no longer a chatbot but a “superintelligence” capable of solving all of physics and making decisions for humanity. OpenAI CEO Sam Altman speaks of “magic intelligence in the sky,” a power that could dictate our future. This ambition forces us to ask: even if we could build a perfectly moral AI, should we? Or would it strip us of choice and render existence meaningless?

The Alignment Problem Is Deeper Than Code

Solving the “alignment problem” (ensuring AI does what we want) is not merely a technical challenge; it is a philosophical crisis. Morality is subjective, contested, and context-dependent. AI engineers, many of whom lack training in ethics, have struggled to define even basic principles, and superficial approaches to ethics have already produced biased and discriminatory systems, sometimes with tragic consequences.

Even if we agreed on a single moral framework, it would still be flawed. History teaches us that breaking rules can be virtuous: Rosa Parks’ refusal to give up her bus seat broke the law, yet it helped ignite the Montgomery bus boycott and the wider civil rights movement. AI must recognize that moral choices aren’t always clear-cut. Some values are simply incommensurable, forcing hard choices where no best option exists.

The Paradox of Superintelligence

Some researchers, like Joe Edelman of the Meaning Alignment Institute, believe aligning AI is possible, but only if it learns to recognize when it doesn’t know the right answer. “If you get [the AI] to know which are the hard choices, then you’ve taught it something about morality,” he says. But this could mean an AI that systematically falls silent on the most critical issues.

Others, like Eliezer Yudkowsky, have framed alignment as an engineering problem waiting to be solved. Yudkowsky has proposed that we build superintelligence and let it run society, making decisions based on humanity’s “coherent extrapolated volition.” This is not a working algorithm but a hypothetical procedure: the AI would extrapolate what humanity would want if we knew more, thought faster, and were more the people we wished we were.

This approach raises the specter of a “tyranny of the majority,” where minority views are crushed by an omnipotent AI. Philosopher Nick Bostrom warned of a future where superintelligence shapes all human lives with no recourse.

The Choice Before Us

The ancient debate resurfaces: do we trust a higher power, even an artificial one? Yudkowsky’s vision would have us build AI and let it decide, even if that means surrendering control. But many disagree. More than 130,000 signatories, including many AI researchers, have called for regulation or even prohibition, and a majority of the public shares that view.

Ultimately, the question isn’t just about building AI, but about what kind of future we want. Do we seek a tool to augment our intelligence, or a god to replace our agency? The Talmud’s lesson remains clear: even divine authority shouldn’t dictate human choice. The future of AI depends on whether we remember that lesson.

The pursuit of superintelligence isn’t just a technological quest; it’s a philosophical reckoning. The AI we build will shape not just our world, but the very meaning of being human.