For years, consumers have tracked their health data through wearables, from early Fitbits to advanced smart rings. Now artificial intelligence is stepping in to analyze that data, with major tech companies like Google, Apple, and Samsung offering “AI health coaches.” But while these tools promise personalized insights, they also raise serious questions about privacy and effectiveness.

The Rise of AI in Personal Health

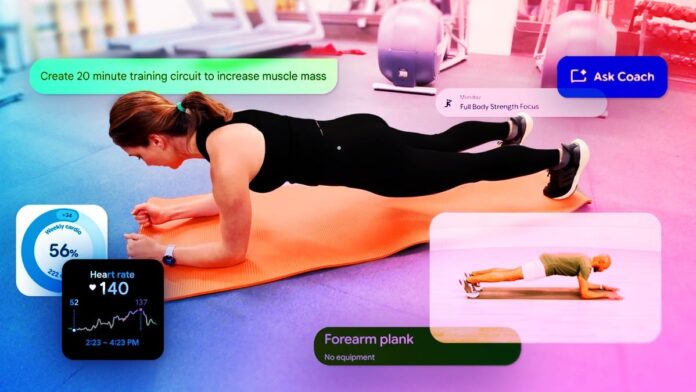

Wearable technology has long relied on AI, from heart-rate alerts to sleep scores. The latest generation of AI health coaches goes further, however, using generative AI similar to the models behind ChatGPT to deliver dynamic, real-time advice on topics ranging from fitness to mood swings. The catch is that using them means handing over your most sensitive biometric data to algorithms that are still prone to errors and biases.

According to Karin Verspoor of RMIT University, early AI in wearables focused on “predictive modeling”: identifying patterns and surfacing alerts. Now we are entering an era in which AI is more “responsive” but also less predictable, and it can generate inaccurate or misleading information.

The Current Landscape: Promising, But Flawed

Over the past year, companies have rolled out AI-powered features across their devices. Google is testing an AI coach in Fitbit, Apple is exploring ChatGPT integration in its Health app, and Meta has partnered with Garmin and Oakley to embed voice assistants into smart glasses. The reality, however, falls short of the hype.

Current AI coaches deliver mixed results. Some features, such as Meta AI reading out your heart rate during workouts, are genuinely useful. Others, like Samsung’s generic training plans, feel half-baked. Most remain in their infancy, still far from the always-available health advisor they promise to be.

The Potential Upside: Bridging Gaps in Healthcare

The U.S. healthcare system is strained, and AI could help ease some of that pressure. Dr. Jonathan Chen of Stanford argues that AI can synthesize complex health data to flag early warning signs of conditions like hypertension before they become life-threatening. Personalized insights could also encourage healthier habits and keep people engaged with their own wellness.

AI can also fill gaps in care, particularly in communities with limited access to medical resources. In one example, a family member received a real-time heart rhythm alert from an Apple Watch, which led to a timely diagnosis and a procedure that may have saved his life. The wearable didn’t replace medical care; it enhanced it.

The Privacy Trade-Off: Your Data Is the Currency

The biggest concern is data privacy. Using an AI health coach often means granting a company access to years of biometric data, medical history, and even location information. Companies collect this data to train their models, and the terms of use are often vague and difficult to understand.

An analysis by the Electronic Privacy Information Center found that health-related data is frequently shared with third parties for advertising purposes, often because data collected by consumer apps and devices falls outside HIPAA, which generally covers only healthcare providers, health plans, and their business associates. Even anonymized data can be re-identified, and a data breach or a company’s bankruptcy could expose sensitive information.

Navigating the Future: Caution and Awareness

The long-term impact of AI health coaches remains uncertain. They are unlikely to either revolutionize healthcare or trigger a privacy apocalypse. Instead, they will likely become another tool in the wellness ecosystem, requiring users to be vigilant about data sharing and critically evaluate the advice they receive.

Experts agree that AI should supplement, not replace, traditional healthcare. Consumers should read privacy policies carefully, understand how their data will be used, and weigh the benefits against the risks. The future of AI in health depends on responsible development and informed choices by users.